Docker Swarm + MACVLAN

A little while ago I created an Ansible playbook that deploys Pi-hole to a Docker swarm to provide a redundant, self-hosted, ad-blocking DNS service. It's been working great, but two issues have come up as a result of running the service in a swarm:

- Clients were not reported properly to Pi-hole

- Updating configuration became hit or miss due to swarm's routing mesh

In this post I'll get into what's happening and how to use MACVLAN routing to resolve both issues.

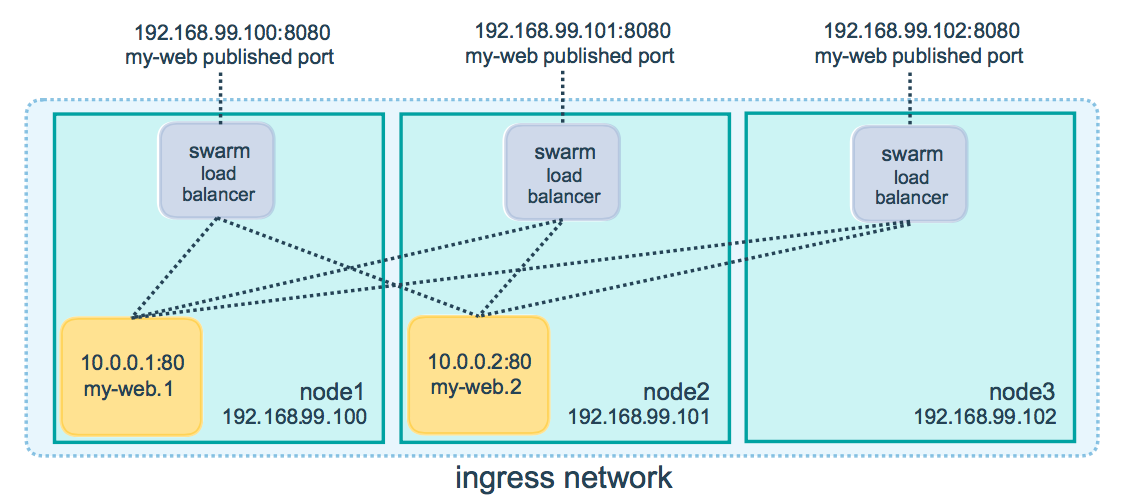

It'll be easier to explain the first issue if we start with the second. As the old adage goes, "a picture is worth a thousand words", so let's start by looking at what typically goes on when you make a request to a swarm service.

Basically, what this illustrates is that when you make a request to a replicated swarm service, there is no guarantee as to which instance answers it. So while I may make all my requests to node1, swarm's load balancer might send any given request to the instance on node2. In our case this causes trouble when configuring Pi-hole: the request to update the configuration may not reach the same instance that served the page, resulting in a security token mismatch.

This also explains the first issue, where clients making DNS queries were not being reported correctly in the Pi-hole admin console. I was only seeing 2 clients with 10.0.0.x IP addresses instead of all the devices on my network. The 2 "clients" were the swarm load balancers that accept the DNS request, decide where to send it, and pass it along.

So the problem lies within swarm's routing mesh, and the way to resolve both of our issues is to circumvent it with a MACVLAN network. Essentially, this allows each Pi-hole instance to get its own IP on our main subnet, so we can communicate directly with each instance individually. Let's get started!

First we'll create a network configuration on each node that will host our service. The config defines the IP address the container deployed to that node will use.

docker network create --config-only --subnet 192.168.1.0/24 -o parent=eth0 --ip-range 192.168.1.150/32 mvl-config

Execute the above command on each node, updating the --subnet and -o parent params as needed. Notice that --ip-range is a /32 CIDR address, which limits the range to a single IP. You'll need to increment this per node, making sure not to reuse an IP that is already in use, or that may later be handed out, on your network. Swarm's IPAM (IP address management) is supposed to handle this for us, but in my experience it doesn't work. Maybe I'm not doing something right, so let me know!
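Since only the last octet changes from node to node, the per-node command can be generated rather than typed by hand. Here's a minimal sketch, assuming your nodes are numbered from 0 and that the addresses starting at 192.168.1.150 are free on your network. The function name mvl_config_cmd and the MVL_* variables are made up for illustration. It only prints the command, so you can check it before running it on the node.

```shell
#!/bin/sh
# Hypothetical helper: print the config-only network command for one node.
# Assumed defaults match the example above; override them for your network.
MVL_SUBNET="${MVL_SUBNET:-192.168.1.0/24}"
MVL_PARENT="${MVL_PARENT:-eth0}"
MVL_PREFIX="${MVL_PREFIX:-192.168.1}"    # first three octets of the node IPs
MVL_FIRST_HOST="${MVL_FIRST_HOST:-150}"  # last octet used by node 0

mvl_config_cmd() {
  # $1 is the node index (0, 1, 2, ...); each node gets the next address.
  host=$((MVL_FIRST_HOST + $1))
  if [ "$host" -gt 254 ]; then
    echo "node index $1 runs past the end of the /24" >&2
    return 1
  fi
  echo "docker network create --config-only --subnet $MVL_SUBNET -o parent=$MVL_PARENT --ip-range $MVL_PREFIX.$host/32 mvl-config"
}

# Print the commands for the first two nodes.
mvl_config_cmd 0
mvl_config_cmd 1
```

Run the printed command on the matching node. The helper does nothing to check whether an address is actually free, so that part is still on you.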

Next we'll create a swarm-scoped network that uses the configuration we just created. Run the following on the manager node:

docker network create -d macvlan --scope swarm --attachable --config-from mvl-config mvl

Finally we deploy our service and specify the network created in the last step:

docker service create --replicas 2 --name mvl-net-example --network mvl busybox:latest sleep 3600

Run docker network inspect mvl on each node and you'll see each instance has its expected IP. While this service just spins up busybox containers that don't do anything, you can easily adapt it to your services.
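The full inspect output is fairly long, so it can help to trim it down to just names and addresses. Here's a small sketch using the --format option of docker network inspect; the wrapper name show_mvl_ips is my own invention, and it assumes the network is called mvl as above.

```shell
#!/bin/sh
# Hypothetical helper: list the containers attached to a network on the node
# where it is run, one "name address" pair per line.
# $1 is the network name and defaults to the mvl network created above.
show_mvl_ips() {
  docker network inspect "${1:-mvl}" \
    --format '{{range .Containers}}{{.Name}} {{.IPv4Address}}{{println}}{{end}}'
}

# Usage, on each node:
#   show_mvl_ips
```

Each node should list only its own task, with the address from that node's /32 range.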

Now that we're communicating with each instance directly, client IPs are no longer lost in the routing mesh and Pi-hole reporting works! It also means configuration update requests are POSTed to the same instance that served the page, avoiding token errors. Since the solution also implements GlusterFS-backed mount points, any configuration update is replicated to the other nodes.
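A quick way to confirm each instance answers on its own address is to query them one at a time. This is only a sketch: it assumes dig is installed, that the service is Pi-hole rather than the busybox example, and that the instances ended up on 192.168.1.150 and 192.168.1.151. The function name query_instance is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: send one DNS query straight to a single instance.
# $1 is the instance IP, $2 an optional name to look up.
query_instance() {
  dig +short +time=2 +tries=1 "@$1" "${2:-example.com}"
}

# Usage, from another machine on the LAN (IPs assumed from the /32 ranges above):
#   query_instance 192.168.1.150
#   query_instance 192.168.1.151
```

If both return an answer, each instance is reachable directly, and the queries should show up in that instance's admin console under the real IP of the machine you ran them from.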

I'm still deciding the best way to implement this into my playbook but look for an update, hopefully soon. Hope this helped!